Artificial Intelligence has made outstanding progress in recent years, powering everything from chatbots and recommendation systems to self-driving cars. But as powerful as AI can be, it also faces some serious challenges. One of the most common and misunderstood issues in building AI models is overfitting.

Understanding what overfitting is, why it happens, and why it’s a problem is essential for anyone working with or learning about AI. Signing up for an Artificial Intelligence Course in Bangalore will enable you to understand these essential concepts and develop more efficient AI models, no matter your level of expertise.

What Does Overfitting Mean in AI?

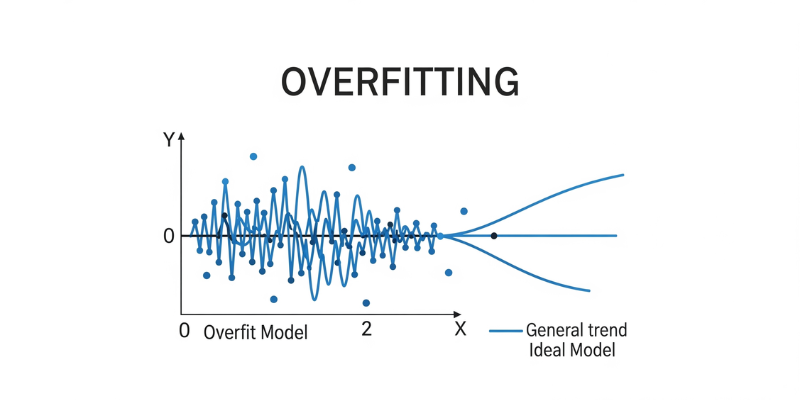

Overfitting happens when an AI model learns the training data too well, including the noise and random patterns that do not represent the real-world problem. Instead of learning the general trends, the model memorizes the specific examples in the dataset. As a result, it performs very well on the training data but poorly on new or unseen data.
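To make "memorizing versus learning the trend" concrete, here is a minimal Python sketch on a hypothetical one-dimensional task: the label is 1 when x > 0.5, and 10% of labels are flipped as noise. `Memorizer` and `ThresholdRule` are illustrative names, not part of any library. The first stores every training example verbatim; the second learns a single cut-off value.

```python
import random

def make_data(n, noise=0.1, seed=0):
    """Hypothetical task: label is 1 when x > 0.5, with a fraction `noise` of labels flipped."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y  # simulated noise in the labels
        data.append((x, y))
    return data

class Memorizer:
    """Stores every training example; any unseen input gets the most common training label."""
    def fit(self, data):
        self.table = dict(data)
        labels = [y for _, y in data]
        self.default = max(set(labels), key=labels.count)
        return self

    def predict(self, x):
        return self.table.get(x, self.default)

class ThresholdRule:
    """Learns one cut-off t (the general trend) and predicts 1 when x > t."""
    def fit(self, data):
        candidates = [x for x, _ in data]
        self.t = max(candidates, key=lambda t: sum(int(x > t) == y for x, y in data))
        return self

    def predict(self, x):
        return int(x > self.t)

def accuracy(model, data):
    return sum(model.predict(x) == y for x, y in data) / len(data)

train = make_data(200, seed=1)
test = make_data(200, seed=2)
mem = Memorizer().fit(train)
rule = ThresholdRule().fit(train)
for name, model in (("memorizer", mem), ("threshold rule", rule)):
    print(f"{name:15s} train={accuracy(model, train):.2f}  test={accuracy(model, test):.2f}")
```

The memorizer scores perfectly on its own training set but falls back to a single guess for every unseen input. Its test accuracy should therefore drop to roughly chance level, while the one-parameter rule should do about equally well on both sets.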

Imagine teaching a child to recognize cats by showing them only a handful of pictures of fluffy white cats. If the child concludes that all cats must be white and fluffy, they will struggle to recognize a black or short-haired cat later. That’s overfitting in a nutshell: the model becomes too focused on the limited data it was trained on and fails to generalize.

Why Overfitting Happens in AI Models

Overfitting can occur for several reasons. One major cause is excessive model complexity. When an AI model has many layers or parameters relative to the amount of training data, it has enough capacity to fit even the tiniest details of that data, whether those details are meaningful or not.
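A classic way to see the effect of model complexity is to fit the same small, noisy dataset with a simple model and with one that has as many parameters as there are data points. The minimal sketch below assumes a hypothetical straight-line trend y = 2x + 1 with Gaussian noise. It compares a two-parameter least-squares line with a degree-9 Lagrange interpolating polynomial, which has ten parameters for ten training points and therefore passes through every one of them.

```python
import random

def true_trend(x):
    return 2.0 * x + 1.0  # hypothetical underlying relationship

rng = random.Random(0)
xs = [i / 9 for i in range(10)]                        # 10 evenly spaced training inputs in [0, 1]
ys = [true_trend(x) + rng.gauss(0, 0.3) for x in xs]   # targets with Gaussian noise

def fit_line(xs, ys):
    """Least-squares straight line y = a*x + b: 2 parameters."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    b = my - a * mx
    return lambda x: a * x + b

def fit_interpolant(xs, ys):
    """Lagrange polynomial of degree n-1: n parameters, passes through every training point."""
    def p(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return p

def mse(f, points):
    return sum((f(x) - y) ** 2 for x, y in points) / len(points)

train = list(zip(xs, ys))
# Unseen inputs lying between the training points, scored against the noise-free trend.
test = [(x, true_trend(x)) for x in (k / 90 + 1 / 180 for k in range(90))]

line, poly = fit_line(xs, ys), fit_interpolant(xs, ys)
for name, f in (("line (2 params)", line), ("degree-9 poly (10 params)", poly)):
    print(f"{name:26s} train MSE={mse(f, train):.4f}  test MSE={mse(f, test):.4f}")
```

The interpolating polynomial reaches zero training error by bending through the noise. That bending shows up as larger error on the held-out inputs, especially near the ends of the range, while the two-parameter line stays close to the underlying trend.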

Another common cause is not having enough data. With a small dataset, there’s not enough variety to teach the model the broader patterns. In such cases, the model may simply memorize the few examples it sees.
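The sketch below illustrates the small-data problem with a hypothetical fixed-capacity model. It splits the input range into 20 bins and stores the majority label seen in each bin. The task, bin count, noise rate, and sample sizes are illustrative assumptions. With only 20 training examples, most bins typically hold one example or none, so the model effectively memorizes individual points. With 2,000 examples, every bin sees enough variety to recover the broader pattern.

```python
import random

BINS = 20  # fixed model capacity: one stored label per bin (hypothetical choice)

def make_data(n, noise=0.1, seed=0):
    """Hypothetical task: label is 1 when x > 0.5, with a fraction `noise` of labels flipped."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y
        data.append((x, y))
    return data

def bin_of(x):
    return min(int(x * BINS), BINS - 1)

def fit_bins(data):
    """Predict the majority training label in each bin; empty or tied bins default to 0."""
    votes = [[0, 0] for _ in range(BINS)]
    for x, y in data:
        votes[bin_of(x)][y] += 1
    return [int(ones > zeros) for zeros, ones in votes]

def accuracy(table, data):
    return sum(table[bin_of(x)] == y for x, y in data) / len(data)

test = make_data(1000, seed=99)
gaps = {}
for n in (20, 2000):
    train = make_data(n, seed=n)
    table = fit_bins(train)
    tr, te = accuracy(table, train), accuracy(table, test)
    gaps[n] = tr - te
    print(f"n={n:5d}  train={tr:.2f}  test={te:.2f}  gap={tr - te:.2f}")
```

The same model, unchanged, should show a large train/test gap on the small dataset and a gap close to zero on the large one.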

Poor data quality also contributes to overfitting. If the dataset contains errors such as mislabeled examples, duplicates, or irrelevant information, the model may treat these as meaningful signals, leading to inaccurate predictions on new data.
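Here is a minimal sketch of how a memorizing model absorbs labeling errors. It uses a hypothetical one-dimensional task in which 20% of the training labels are deliberately flipped. A 1-nearest-neighbor classifier, which simply returns the label of the closest training example, reproduces every wrong label it was given. A 15-nearest-neighbor vote averages over enough examples that isolated errors are outvoted. The values of k, the noise rate, and the data generator are illustrative assumptions.

```python
import random

def make_data(n, noise, seed):
    """Hypothetical task: true label is 1 when x > 0.5; a fraction `noise` of labels is flipped."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        y = int(x > 0.5)
        if rng.random() < noise:
            y = 1 - y  # simulated labeling error
        data.append((x, y))
    return data

def knn_predict(train, x, k):
    """Majority vote among the k training points nearest to x (k is odd, so there are no ties)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return int(sum(y for _, y in nearest) > k / 2)

def accuracy(train, data, k):
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

train = make_data(200, noise=0.2, seed=3)       # 20% of training labels are wrong
clean_test = make_data(300, noise=0.0, seed=4)  # scored against the true pattern

for k in (1, 15):
    print(f"k={k:2d}  train={accuracy(train, train, k):.2f}  "
          f"clean test={accuracy(train, clean_test, k):.2f}")
```

Both models are scored on clean labels at test time. The 1-nearest-neighbor model looks perfect on its own noisy training set precisely because it has memorized the mistakes. The larger k gives up that perfect training score in exchange for predictions that follow the true pattern.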

Why Overfitting Is a Problem in Artificial Intelligence

The goal of AI is not simply to perform well on the data a model was trained on. The real test is how well the model performs on new, unseen data and in real-world situations. An overfitted model fails that test: it cannot adapt beyond its training examples, so it makes poor predictions once deployed.

Overfitting reduces the reliability of AI systems. For example, an overfitted fraud detection system may miss new types of fraud because it only recognizes patterns it has already seen. This can lead to financial losses or security breaches.

In areas like healthcare, overfitting becomes even more serious. A medical AI tool that performs well only on a specific group of patients, but fails to detect patterns in others, could lead to wrong diagnoses and put patient safety at risk.

Overfitting also wastes computational resources. Time and energy go into training complex models that look accurate on their training data but fail when deployed. This can slow down AI development and damage trust in AI solutions.

How to Detect and Prevent Overfitting

The most common way to detect overfitting is to compare a model’s performance on the training data with its performance on validation or test data that it has not seen. If accuracy is high on the training set but much lower on the validation or test set, overfitting is the likely cause. Understanding how to spot and fix such problems is something you can learn in detail through a quality Artificial Intelligence Course in Mumbai.
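The check described above can be written down in a few lines. This sketch assumes accuracy as the metric and uses a hypothetical cut-off of 0.05 for what counts as a "large" gap. In practice, the acceptable gap depends on the task, the metric, and the size of the validation set. `holdout_split` and `diagnose` are illustrative helper names, not functions from a particular library.

```python
import random

def holdout_split(data, val_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it into training and validation sets."""
    items = list(data)
    random.Random(seed).shuffle(items)
    n_val = max(1, int(len(items) * val_fraction))
    return items[n_val:], items[:n_val]

def diagnose(train_acc, val_acc, max_gap=0.05):
    """Flag likely overfitting when training accuracy exceeds validation accuracy by more than max_gap."""
    gap = train_acc - val_acc
    if gap > max_gap:
        return f"likely overfitting (gap {gap:.2f})"
    return f"no large gap (gap {gap:.2f})"

print(diagnose(0.99, 0.71))  # likely overfitting (gap 0.28)
print(diagnose(0.91, 0.89))  # no large gap (gap 0.02)
```

The validation set must stay out of training entirely. Otherwise, the comparison measures memorization on both sides and the gap disappears.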

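To prevent overfitting, AI developers use techniques such as cross-validation (to choose models and settings that generalize), simplifying the model, and gathering more high-quality data. Regularization, which penalizes large model weights, and dropout, which randomly switches off units in a neural network during training, can also help. Both discourage the model from relying on incidental details and push it toward the most important patterns.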
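Cross-validation and regularization are often used together. The regularization strength is a setting, and cross-validation is a way to choose it using only the training data. The sketch below assumes a hypothetical linear-regression problem with 10 input features, of which only 2 actually matter. It uses L2 (ridge) regularization, one common form of regularization, with 5-fold cross-validation to compare several penalty strengths. It is written in plain Python so that it is self-contained. The helper names and candidate values are illustrative, and real projects would normally use a numerical library instead of the hand-written solver.

```python
import random

def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w

def ridge_fit(X, y, lam):
    """L2-regularized least squares: minimize ||Xw - y||^2 + lam * ||w||^2 (no intercept term)."""
    p = len(X[0])
    a = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0) for j in range(p)]
         for i in range(p)]
    b = [sum(row[i] * t for row, t in zip(X, y)) for i in range(p)]
    return solve(a, b)

def mse(w, X, y):
    return sum((sum(wi * xi for wi, xi in zip(w, row)) - t) ** 2 for row, t in zip(X, y)) / len(y)

def k_fold_indices(n, k):
    """Split indices 0..n-1 into k interleaved folds."""
    return [list(range(f, n, k)) for f in range(k)]

def cv_error(X, y, lam, k=5):
    """Average validation MSE over k folds; each fold is held out once while the rest are fitted."""
    total = 0.0
    for fold in k_fold_indices(len(y), k):
        held = set(fold)
        tr = [i for i in range(len(y)) if i not in held]
        w = ridge_fit([X[i] for i in tr], [y[i] for i in tr], lam)
        total += mse(w, [X[i] for i in fold], [y[i] for i in fold])
    return total / k

# Hypothetical data: 40 rows, 10 features, only features 0 and 1 affect the target.
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(10)] for _ in range(40)]
y = [3.0 * row[0] - 2.0 * row[1] + rng.gauss(0, 1.0) for row in X]

scores = {lam: cv_error(X, y, lam) for lam in (0.0, 0.1, 1.0, 10.0, 100.0)}
best = min(scores, key=scores.get)
for lam, err in scores.items():
    print(f"lambda={lam:6.1f}  cv_mse={err:.3f}")
print("selected lambda:", best)
```

Each candidate `lam` is scored only on folds the model did not see during fitting. The selected value is therefore the one that generalizes best under this setup, rather than the one that fits the training data most closely (which would always be `lam = 0`). The folds here are interleaved because the synthetic rows are already in random order; with real data, shuffle before splitting.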

Overfitting is one of the biggest obstacles in developing reliable and trustworthy AI systems. It turns models into specialists in their training data rather than general problem-solvers. By understanding why overfitting happens and how to detect it early, AI developers can build smarter, more adaptable systems that work well in the real world.

In a rapidly evolving field such as artificial intelligence, ensuring that models can apply what they have learned beyond their training data is not merely a technical objective; it is essential to the ethical and practical use of AI.
